June 29, 2018 / cpstobart

Past, practice and specimen papers are done by all of us. It is a tried and tested means of getting students used to question style, wording, topic mixture and many other aspects of the exam. Sometimes I feel that we can give students past paper exhaustion – that they are ‘past-papered-out’ by the time the actual exam comes around.

This year we tried to look at how we could use some of last year’s papers in a more structured and reflective way. In April we decided to use one of them as a mock exam (and as a reality check), but to analyse the results in a different way.

I am an active Twitter user (@cpstobart, on education-related topics only), and there are a number of inspirational maths leaders and teachers out there. Ideas are suggested and developed in weekly Twitter conversations at #mathscpdchat and #mathschat, which I join when I can contribute constructively.

I was particularly interested in one idea, which required recording the mark gained on every part question by every student. Putting these into a spreadsheet, colour coded by the marks gained, produced an amazing map of accuracy and understanding.

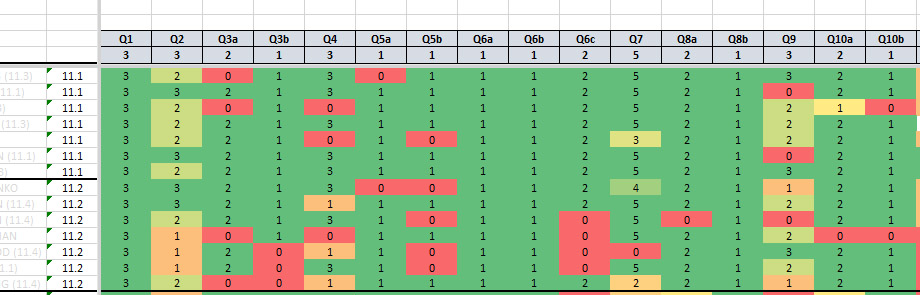

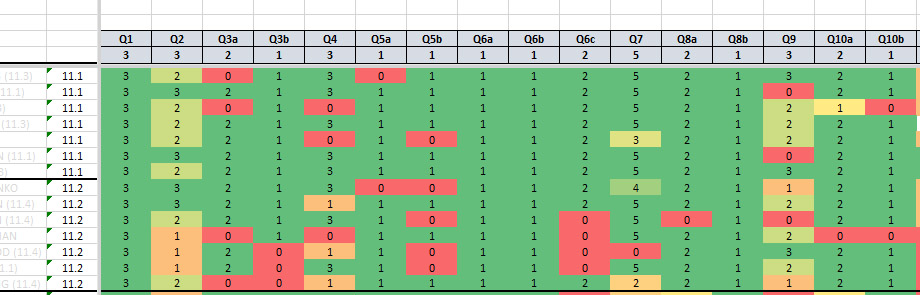

Here is a portion of the ‘map’:

The header rows give the question number and the marks available. Each row below holds the marks gained by one student, and each column is a part question. Colours range from green (full marks) to red (no marks).
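The colouring itself is simple to reproduce. Below is a minimal Python sketch, not the spreadsheet we actually used. It assumes a red, yellow, green scale of the kind that spreadsheet colour-scale formatting offers. The function name `cell_colour`, the part-question labels and the marks are all hypothetical.

```python
def cell_colour(gained: int, available: int) -> str:
    """Hex colour for one cell: red (no marks) -> yellow (half) -> green (full marks)."""
    if available <= 0:
        raise ValueError("a part question must carry at least one mark")
    fraction = max(0.0, min(1.0, gained / available))
    if fraction <= 0.5:
        red, green = 255, round(510 * fraction)  # red stays full, green rises
    else:
        red, green = round(510 * (1 - fraction)), 255  # green stays full, red falls
    return f"#{red:02X}{green:02X}00"


# Hypothetical header rows: part-question labels and marks available.
PARTS = ["1", "2a", "2b", "3a", "3b"]
AVAILABLE = [2, 1, 3, 2, 2]
# Hypothetical rows: marks gained by each student, one column per part question.
MARKS = {
    "Student A": [2, 1, 3, 2, 0],
    "Student B": [2, 1, 0, 2, 0],
}

for student, row in MARKS.items():
    colours = [cell_colour(g, a) for g, a in zip(row, AVAILABLE)]
    print(student, colours)
```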

The question for us was: what can we do with this information?

We could identify which questions were done well by virtually all students (topics that did not need revision) and which were done poorly by many students (intervention required). We could also see where students had started a question successfully but then did not manage to follow through to a second or third part. Why not?
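Reading the map can also be partly automated. This is a hypothetical sketch with invented marks. For each part question, it works out the mean fraction of marks gained across the group. It then flags parts above and below two assumed thresholds (0.8 and 0.5, not figures we used). Finally, it lists students who gained full marks on one part but nothing on the next part of the same question.

```python
from statistics import mean
from string import ascii_lowercase

# Hypothetical data: (part-question label, marks available), then marks per student.
PARTS = [("1", 2), ("2a", 1), ("2b", 3), ("3a", 2), ("3b", 2)]
MARKS = {
    "Student A": [2, 1, 3, 2, 0],
    "Student B": [2, 1, 0, 2, 0],
    "Student C": [1, 0, 1, 2, 1],
}

# Assumed thresholds; choose your own.
WELL_DONE = 0.8   # mean fraction at or above this: topic does not need revision
NEEDS_HELP = 0.5  # mean fraction below this: intervention required


def facility(parts, marks):
    """Mean fraction of the available marks gained on each part question."""
    return {
        label: mean(row[i] / available for row in marks.values())
        for i, (label, available) in enumerate(parts)
    }


def stalled_follow_through(parts, marks):
    """(student, earlier part, later part) where full marks on one part were
    followed by zero marks on the next part of the same question."""
    stalls = []
    for student, row in marks.items():
        for i in range(len(parts) - 1):
            (first, first_available), (second, _) = parts[i], parts[i + 1]
            # "3a" and "3b" both strip down to question "3"
            same_question = first.rstrip(ascii_lowercase) == second.rstrip(ascii_lowercase)
            if same_question and row[i] == first_available and row[i + 1] == 0:
                stalls.append((student, first, second))
    return stalls


scores = facility(PARTS, MARKS)
no_revision = [q for q, f in scores.items() if f >= WELL_DONE]
intervention = [q for q, f in scores.items() if f < NEEDS_HELP]
stalled = stalled_follow_through(PARTS, MARKS)
print("No revision needed:", no_revision)
print("Intervention required:", intervention)
print("Started but did not follow through:", stalled)
```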

Initially we thought we could regroup students so that those with the same problem topic came together for a couple of lessons, then regroup them again, and again. This would provide better-managed, more targeted revision.

Although this would have been a very good exercise, what we discovered was that there were a few part questions on which everyone performed poorly. This meant we could keep the classes as normal and tackle the same problem in every group.

The difficulties arose from interpreting and decoding the questions. On closer inspection we found questions where particular wording had been the problem.

This could have been an unusual context in which the question was set, specific mathematical vocabulary that had been muddled, or a cultural misunderstanding. It was surprising how widespread some of the problems turned out to be, but we would not have been able to identify them without this expansive overview.

In many instances, once vocabulary issues were resolved, students found solutions without any further help. For us, this highlights the importance of vocabulary and context. While we make extensive use of student word banks, we can never relax our efforts to keep them regularly updated. For many students, just a small amount of help brought big rewards because a question was suddenly unlocked.

Was this a useful exercise? Without doubt. We had a preconceived idea about what we were going to do but the data took us in another direction along a route that was, ultimately, better than our original idea.

Would we do this again? Absolutely. Having the overall map of student achievement by part question is a terrific snapshot of their levels of understanding. It offers suggestions about the type of intervention that can be usefully employed to clear up misconceptions and deepen understanding.

October 29, 2016 / cpstobart

Creating the schemes of work for the new 9-1 syllabus has not been an easy task. We had, of course, mapped out the whole two years in time for the start of last year, when the then Y10 were starting, but we didn’t know how things would work out.

I think we did a pretty good job with the students in Y10, and we haven’t needed to tweak the Y10 scheme very much from 2015/16 to 2016/17. We did find that we could adjust the original version of the Y11 plan a little: when we reached the end of June we had actually covered more than we had planned, which meant we could build in a few revision weeks.

We also do the GCSE in one year. Squeezing everything into 25 or so weeks is pretty tough, and the only way we can be sure of managing it is to use flipped lessons and independent learning. We have to assume that a great deal of prior learning has happened somewhere in our students’ lives, and build study sessions and homework around that assumption. We chose to pile this heavily into term 1 so that we have breathing space from January, when our mock exams also kick in.

So far, after the first half term, things are going reasonably well to plan. Inevitably it is in the sets with weaker students that a little ground has been lost, but with study/homework time freed up next term we can claw some of that back.

Our schemes of work are here as PDFs:

Y10 scheme

Y11 scheme

GCSE maths in one year

If this helps you to look at how to pack things in, great.

Happy planning.

Colin

July 20, 2015 / cpstobart

Having just completed (apart from the tidying up, query and edit process) the authoring of a collection of materials to accompany the new Collins 4th edition Mathematics GCSE Foundation student book, it is apparent that AO3 has really become a focus of the new changes.

The brief required, for each chapter of the student book, a context-based problem that “will help to build students skills and confidence for tackling (AO3) problem-solving questions.” When these are made into videos, each will have a worked solution with an audio commentary that talks students through a suitable strategy for tackling the question and the skills needed to solve it.

There was a need to offer an evaluation opportunity, so that students could be prompted to evaluate the method used and the answer obtained. There also needed to be an opportunity to consider whether alternative methods could have been used.

The difficult task was not in creating contexts in which to locate problems, but thinking of ways in which to incorporate the syllabus requirements embedded in AO3, that students should be able to:

- interpret results in the context of the given problem

- evaluate methods used and results obtained

- evaluate solutions to identify how they may have been affected by assumptions made.

Within Foundation Tier questions, these requirements will make life difficult for students.

In the past, a question looking at different ways of purchasing something (a deposit of varying percentages followed by differing monthly payments) would require an evaluation of the results, but not of the method used. Previously, students have encountered questions that ask them to explain, show or prove, but not also to comment on the assumptions they have made or on the method they have used.
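This sketch illustrates the calculation such a question involves, using entirely hypothetical figures (an £800 item and two invented payment plans). Comparing the totals is the evaluation of results. What AO3 adds is a comment on the method and on the assumptions, for example that there are no extra fees.

```python
def total_cost(price: float, deposit_percent: float, monthly: float, months: int) -> float:
    """Deposit (a percentage of the price) plus every monthly payment."""
    deposit = price * deposit_percent / 100
    return deposit + monthly * months


PRICE = 800  # hypothetical cash price in pounds
plans = {
    "Plan A: 10% deposit, 12 x £65": total_cost(PRICE, 10, 65, 12),
    "Plan B: 25% deposit, 6 x £105": total_cost(PRICE, 25, 105, 6),
}
for name, cost in plans.items():
    print(f"{name}: £{cost:.2f} (£{cost - PRICE:.2f} more than the cash price)")
```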

On top of this, more questions are going to be set in a context from which students will be required to extract information, rather than presenting them with a stark equation to solve or a diagram asking them to work out x. So not only do students have to interpret results, they have to interpret the question accurately as well.

This is going to present huge challenges if, like me, you work with students whose first language is not English, or students who may have poor literacy skills.

So what kinds of question can be expected, and how are they to be answered?

For example, when looking at properties of number I set a scenario involving three looping tracks on a model railway, with circuit times of 18, 20 and 28 seconds. Part of the question involved finding the lowest common multiple of these numbers (1260 seconds, or 21 minutes). The most efficient method is to use prime factor form (arrived at by different methods), but students could instead start producing strings of multiples. That is an agonisingly long process: the list of multiples of 18 runs to 70 entries before reaching 1260. For this part of the question an evaluation of method is possible, comparing the use of prime factors with the use of lists of multiples.
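The two methods can be compared directly in a short Python sketch. The function names are hypothetical, chosen for illustration only. If you just want the answer, Python's standard library also provides `math.lcm` (Python 3.9+).

```python
from collections import Counter


def prime_factors(n: int) -> Counter:
    """Prime factor form of n as {prime: exponent}, found by trial division."""
    factors = Counter()
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] += 1
            n //= p
        p += 1
    if n > 1:
        factors[n] += 1  # whatever is left over is itself prime
    return factors


def lcm_by_prime_factors(*numbers: int) -> int:
    """LCM = product of each prime raised to its highest power in any of the numbers."""
    highest = Counter()
    for n in numbers:
        for prime, exp in prime_factors(n).items():
            highest[prime] = max(highest[prime], exp)
    result = 1
    for prime, exp in highest.items():
        result *= prime ** exp
    return result


def lcm_by_listing_multiples(*numbers: int) -> tuple[int, int]:
    """Step through multiples of the first number until every other number divides it.
    Returns (lcm, how many multiples had to be written down)."""
    first, rest = numbers[0], numbers[1:]
    count, multiple = 0, 0
    while True:
        count += 1
        multiple += first
        if all(multiple % n == 0 for n in rest):
            return multiple, count


times = (18, 20, 28)  # circuit times in seconds
answer = lcm_by_prime_factors(*times)
_, steps = lcm_by_listing_multiples(*times)
print(f"All three trains are back together after {answer} s ({answer // 60} minutes)")
print(f"Listing multiples of 18 takes {steps} steps to reach the same answer")
```

Both approaches arrive at the same answer. The step count makes the efficiency argument concrete, which is the point an evaluation of method would make.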

When examining inverse proportion we are all used to questions such as: 3 men take 6 days to build a wall; how many days would it take 4 men to build the same wall? We would expect most of our students to come up with an answer of 4½ days, but would we also expect them to add that they assumed the length of the working day was the same in each case, or that the 4 men worked at the same rate as the 3 men?
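Written out in full, the expected working puts the assumptions on the page. It assumes that every man works at the same rate and that the working day is the same length in both cases:

```latex
\begin{aligned}
\text{total work} &= 3 \text{ men} \times 6 \text{ days} = 18 \text{ man-days} \\
\text{time for 4 men} &= \frac{18 \text{ man-days}}{4 \text{ men}} = 4\tfrac{1}{2} \text{ days}
\end{aligned}
```

More men means fewer days, so the number of days is inversely proportional to the number of men. If either assumption fails, the 18 man-days figure no longer holds.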

Questions may contain phrases such as ‘Show how this is possible.’ This is the clue that an evaluative solution is required. Not only do students need to produce a numerical answer, they then have to comment on that result, for instance by comparing it with an alternative. The question could also ask for a comparison of methods, for example simple percentage change against repeated percentage change.

Because questions are set in context, students will clearly have fewer clues to the solution from the question itself. Simple and repeated percentage change methods might once have been triggered in a student’s mind by key words such as bank account, percentage interest or number of years invested. Instead, for example, I used the declining numbers in a wildlife population to cover this topic. After students had calculated the population in a given year, I asked them to evaluate the models that two bird watchers had used to forecast population numbers. Students need to consider the nature of the problem carefully, devise a strategy, communicate the developing solution, and provide an evaluation with a summary conclusion. The wording of questions will not immediately signal the method of solution.
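This sketch illustrates the two methods being compared, using hypothetical figures rather than the ones from my question: a population of 2000 birds falling by 10% a year. One watcher's model uses simple percentage change, taking 10% of the original population off every year. The other uses repeated percentage change, taking 10% off the previous year's figure. The function names are illustrative only.

```python
def simple_decrease(start: float, percent: float, years: int) -> float:
    """Simple percentage change: the same amount (percent% of the start) lost each year."""
    return start - start * percent / 100 * years


def repeated_decrease(start: float, percent: float, years: int) -> float:
    """Repeated percentage change: multiply by (1 - percent/100) once per year."""
    return start * (1 - percent / 100) ** years


START, RATE = 2000, 10  # hypothetical population and yearly percentage decline
for year in range(1, 6):
    print(year, round(simple_decrease(START, RATE, year)), round(repeated_decrease(START, RATE, year)))
```

The gap between the two forecasts widens every year. The simple model also reaches zero after 10 years and would then go negative, which no real population can do. That is exactly the kind of point an evaluation of the two bird watchers' models could make.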

We might conclude that, from now on, a good deal more narration is expected.

Communicating method, describing the steps taken, clearly explaining the significance of a solution; these are the skills being extended under the new syllabus and embedded in assessment objective AO3.

June 5, 2015 / cpstobart

The Twitter storm that erupted last evening was amazing. Thanks to Hannah and her sweets, plus 180,000 (and counting) tweets to #EdexcelMaths, the grim details of the examination have reached the homes of every listener to the Chris Evans Radio 2 Breakfast Show and every BBC/ITV news watcher. I was on Twitter last evening and posts were coming in faster than you could scroll down the page.

So what was being said? 99% of tweets were from disgruntled students expressing their anger and grief in ways both funny and vitriolic. By this morning the tide was turning, with a healthy percentage of tweets asking what all the fuss was about. The paper is written to cater for students from middling ability up to gifted students eager to move on to A level study. So is it not to be expected that some students will find some of the questions more challenging, or even no-go areas?

I read through the examination paper at the time and was pleased with the range of question topics, their setting/context and their degree of difficulty. I did not turn the page and stare in disbelief at a question that looked as if it had migrated from an A2 paper. Yes, there were some tricky questions, and yes, there were some pleasing twists to the expected questions, but none that deserved the furore that has mushroomed over the past 24 hours.

One tweet I did read challenged the methods by which students are taught. This is a good point. There is often a strong emphasis on doing mountains of past papers and learning the mark scheme. In other words, we prepare students for a particular style of question and teach them to answer in a particular way using particular key words/phrases. Of course, working through past papers is an invaluable exercise: we need to let students see the style of the papers, get a feel for the way in which questions are asked and see why marks are allocated. After all, this is one reason for doing mock exams.

BUT… should we do this at the expense of encouraging creativity and developing problem-solving skills (for any problem, not just particular ones), holding back from letting students explore and evaluate?

This is partly why the new GCSE starting in September for Y10 students will be such a change for many students (and teachers). In a blog post I wrote for the Collins Maths Festival in March, I said that a key feature of the new syllabus is that the Assessment Objectives (especially AO2) have changed. The linear structure of the course turns out to be, in fact, essentially spiral, requiring topics to be revisited and gradually built on. Students are going to be presented with questions that are more demanding and challenging. Questions are going to be set with less directed structure, making them more open-ended, and problem solving will require students to use different strands of maths for solutions. Instead of the question asking students to do part a), then b), and then use the answers from a) and b) in c), students will need to find their own way through the question. Clearly, students will need to have grasped the fundamentals of maths in order to progress to a higher level of maths achievement.

And so, as far as Hannah and her sweets are concerned, the problem was not that the question was unfairly difficult; it was that many students seem not to have been encouraged to explore and develop a line of reasoning. Students need to be encouraged to collaborate, discuss and explore in order to develop a better understanding of the connections between a variety of strands. It is essential that students, especially at Higher Tier, develop reasoning, improve their ability to make inferences and deductions, and feel confident enough to challenge the validity of an argument.

Oh, yeah… before Hannah felt hungry, there were 10 sweets in the bag: 6 orange and 4 yellow.
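For anyone who missed it, here is the question as it was widely reported. A bag held n sweets, 6 of them orange and the rest yellow. Hannah took a sweet at random and ate it, then took and ate another. The probability that both were orange was 1/3, and students had to show that n² - n - 90 = 0. The chain of reasoning runs as follows, with the final step of solving for n added:

```latex
\begin{aligned}
P(\text{two orange}) = \frac{6}{n} \times \frac{5}{n-1} &= \frac{1}{3} \\
\frac{30}{n(n-1)} &= \frac{1}{3} \\
n(n-1) &= 90 \\
n^2 - n - 90 &= 0 \\
(n - 10)(n + 9) &= 0 \quad\Rightarrow\quad n = 10 \ (\text{since } n > 0)
\end{aligned}
```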

May 9, 2015 / cpstobart

Just taken delivery of my newly co-authored Teacher Pack for the Higher Tier which was published on the 30 April. Looks great. I’m really proud of this resource. Look at my books page, and get your order in at Amazon. Thanks.

May 6, 2015 / cpstobart

This thought-provoking blog says something about the way we tend to view the schooling that some of our overseas students grow up with. The school I work at takes its entire intake from overseas and is 100% boarding. Our two major client bases are Russia (and surrounding -istans) and China.

Chinese students are great with algebra and will solve problems until sleep overtakes them: everything must be expressed as an equation; elegant solutions are essential. Working with graph space is another matter, however, and anything that involves drawing is a struggle. Russian students also have their strengths and blind spots: they love discriminants. I realise that these are generalisations but I see this year after year as new cohorts of students arrive.

Rote learning has been a part of these students’ lives for many years: solving particular problems, getting used to specific formats. What is often lacking is the ability to identify or devise a method of solution. The questioning, evaluative skills that we value need to be developed, often against great resistance. Their background is one of passive learning, where asking questions beyond clarification is not expected. The silence in the classroom is a result of this ‘soaking up’ of the teacher’s mastery and the stifling of the student’s inquisitive mind, taking away the mystery of discovery and the adventure of independent learning.

I was reading an article in Time magazine a week or so ago about the nature of the Chinese system and the route to top American universities. In itself this was an excellent article, but what really set my imagination running was the accompanying photograph, which showed hundreds upon hundreds of students seated at exam desks in a huge outdoor playground. Row upon row; repetition; rote learning.

At this exam time I tell my students that they should not practise until they can do something right; they should practise until they will not get it wrong. Am I guilty of the things I am highlighting as a failure in other systems? Not if I have prepared the way correctly in the lead-up to this period, not if I have sparked or reignited that enquiring mind.

Other systems do have fantastic elements and I am very often grateful for the work ethic that I find in students. I do not want, however, to return to the days where I would have been known as the ‘Maths Master’.

May 1, 2015 / cpstobart

Started a conversation with Julia at Revision Buddies about planning an update to the cool maths GCSE revision app that I had provided content for. If you have followed the blogs here and the ones I wrote for the Collins Online Maths Festival last month, you will have an appreciation of the changes that are coming.

Watch this space!

April 7, 2015 / cpstobart

I was asked a few days ago about the suggestion in my blogs for the Collins Online Maths Festival that we should be introducing mini-investigations. I see these as a way of bringing together a number of syllabus strands so that students can start to make connections between the various topics that they have been studying. This is not just a means to get some revision into the scheme of work but an attempt to encourage and develop the skills connected to the logical presentation of solutions and the independent development of ideas. This idea is relevant to both the Foundation and Higher Tiers.

I believe the key issue comes with the adjustment of the Assessment Objectives – especially AO2 – where students are expected to reason, interpret and communicate mathematically. Can my students make deductions, inferences and draw conclusions; can they construct chains of reasoning; can they assess the validity of an argument?

The new maths GCSE syllabus is so expansive that instead of being linear, it effectively becomes spiral, as topics need to be revisited and new depth added. Why not, once every half-term, devote 4 or 5 lessons (3 to 4 hours) to pulling together a number of previously taught topics in open-ended, investigative work?

One suggestion I offered was sports related. I have a Y11 class at the moment who are all boys, with nearly all of them involved in one sports team or another and some of them doing GCSE PE. What strands and topics is it possible to combine here?

Focus on the layout of a soccer pitch, basketball court or a track and field arena, and look at area, perimeter, trigonometry, scale drawing, construction, percentages, ratio.

As a starter this would throw together:

- Area and perimeter of pitches/playing field (G14)

- Shape of elements of the playing field (G4)

- Area of elements in the playing field (centre circle, goalkeeper area, javelin/discus throwing area, …) (G9, G18)

- Each element as a percentage of the whole area (R3, R9)

- Comparison of different dimensions of pitches, for example, as stated by the sport’s governing body (N16)

- Scale drawings of the playing surface with markings (R2, G2)

- Metric/ Imperial conversion of dimensions (N13)

- Total revenue from spectator attendance (N2), over the season/per game (S4)

- Ratio breakdown of spectators; male:female, adult:child, away:home, … (R5)

- Average attendance figures (S4, N14)

- Mean/median/mode goals/points per game/player (S4)

- Player on pitch shooting for goal/basket, angle of ball trajectory/ distance from target (G20)

- Relative sizes of volume/surface area of balls used (G17, R5, R9)

- Area of the front of the goal, area of netting required for the goal/discus throwing circle (R12, G12)

- Spectator view to pitch (angle of depression)/distance, player view to stands (angle of elevation)/distance, … (G20)
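As a sketch of how a few of these strands combine, the Python below works through several of the listed calculations. It covers area and perimeter, a circle's area as a percentage of the whole, a metric/imperial conversion and an angle of depression. The dimensions are assumed for illustration: 105 m by 68 m, with a centre circle of radius 9.15 m. Check the relevant governing body's figures before using them with a class. The seat position is entirely hypothetical.

```python
import math

# Assumed example dimensions; check the governing body's figures for real work.
LENGTH_M, WIDTH_M = 105, 68
CENTRE_CIRCLE_RADIUS_M = 9.15
METRES_PER_YARD = 0.9144  # exact, by definition of the international yard

pitch_area = LENGTH_M * WIDTH_M                      # area of a rectangle (G14)
pitch_perimeter = 2 * (LENGTH_M + WIDTH_M)           # perimeter (G14)
circle_area = math.pi * CENTRE_CIRCLE_RADIUS_M ** 2  # area of a circle
circle_percent = 100 * circle_area / pitch_area      # element as % of whole (R9)
length_yd = LENGTH_M / METRES_PER_YARD               # metric to imperial (N13)
width_yd = WIDTH_M / METRES_PER_YARD


def angle_of_depression(height_m: float, horizontal_m: float) -> float:
    """Angle (degrees) below the horizontal from a spectator's eye to a point on the pitch (G20)."""
    return math.degrees(math.atan(height_m / horizontal_m))


print(f"Pitch: {pitch_area} m^2, perimeter {pitch_perimeter} m")
print(f"Centre circle: {circle_area:.1f} m^2 ({circle_percent:.2f}% of the pitch)")
print(f"In yards: {length_yd:.1f} by {width_yd:.1f}")
# Hypothetical seat: eye 12 m above pitch level, 30 m horizontally from the point watched.
print(f"Angle of depression: {angle_of_depression(12, 30):.1f} degrees")
```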

This list can be extended as far as your, or the students’, imagination allows. What about the cost of re-turfing the pitch: cost of turf (£/m²), labour cost, VAT payments, manpower needed, volume of water used by the sprinklers at half time, rate of flow of the water, cost, …
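The re-turfing question could be costed along these lines. All prices, the flow rate and the timing are hypothetical. The 20% VAT rate is the UK standard rate, assumed here to apply to the whole bill. The function names are illustrative only.

```python
VAT_RATE = 0.20  # UK standard rate of VAT; assumed to apply to turf and labour alike


def returfing_cost(area_m2: float, turf_per_m2: float, labour: float) -> float:
    """Turf plus labour, with VAT added on top."""
    before_vat = area_m2 * turf_per_m2 + labour
    return before_vat * (1 + VAT_RATE)


def water_used(flow_litres_per_min: float, minutes: float) -> float:
    """Volume = rate of flow x time."""
    return flow_litres_per_min * minutes


# Hypothetical figures: a 7140 m^2 pitch, £4 per m^2 of turf, £6000 labour.
print(f"Re-turfing: £{returfing_cost(7140, 4, 6000):,.2f}")
# Hypothetical sprinklers: 500 litres per minute for a 15-minute half time.
print(f"Water at half time: {water_used(500, 15):,.0f} litres")
```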

Students could be tasked in small groups to research data for many of the bullet points above. Sport is a fantastic generator of statistical information.

Line graphs, bar charts, pie charts and the like are all possible from the list given above: ratio of spectators, number of defenders:midfield:attackers, attendance patterns across the season, revenue per game across the season, …
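Much of the statistical work reduces to a few standard calculations. The sketch below uses invented season data and a hypothetical helper, `simplest_ratio`. It shows the mean, median and mode, an average attendance, and a team formation written as a ratio in its simplest form.

```python
from functools import reduce
from math import gcd
from statistics import mean, median, mode

# Hypothetical season data for one team.
goals_per_game = [1, 0, 2, 3, 1, 1, 2]
attendance = [18250, 17900, 19400, 16800, 18250, 20100, 17500]


def simplest_ratio(*parts: int) -> tuple:
    """Divide every part by the highest common factor, e.g. 4:4:2 -> 2:2:1."""
    hcf = reduce(gcd, parts)
    return tuple(p // hcf for p in parts)


print("Goals per game: mean", round(mean(goals_per_game), 2),
      "median", median(goals_per_game), "mode", mode(goals_per_game))
print("Average attendance:", round(mean(attendance)))
print("Defenders:midfield:attackers =", ":".join(map(str, simplest_ratio(4, 4, 2))))
```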

I am convinced that getting students talking, engaging, collaborating, discussing and exploring is really good for vocabulary and method, and stimulates a better understanding and connection of a variety of strands. Done well, this will encourage and develop student reasoning, their ability to make inferences and deductions, and their ability to check the validity of an argument.

Above all this kind of approach will develop confidence – a vital attribute that all our students need.

@cpstobart

March 27, 2015 / cpstobart

The second of my blog posts about the new Maths GCSE was published by Collins yesterday. This one is about delivering the new Higher Tier and the implications for teaching content, teaching style, learning style and mini-investigations. Have a look and see if you agree. Is it too visionary?

http://freedomtoteach.collins.co.uk/implications-new-curriculum-teach-higher-tier/

@cpstobart

March 23, 2015 / cpstobart

Blog post went up this afternoon. Have a read of my thoughts on delivering the Foundation Tier. Hopefully this will, at least, stimulate some ideas and some conversations. Get involved…

http://freedomtoteach.collins.co.uk/implications-new-curriculum-teach-foundation-tier